Guest post by Gareth Fearnley, Senior Scientist at Arctoris

When I joined Arctoris as a Senior Scientist almost a year ago, I must admit that I was sceptical about the benefits that automating cell-based assays could bring to drug discovery research, especially for assays of relatively low complexity. As a passionate bench scientist, I also felt some trepidation about hanging up my pipette. However, after learning how to program various robotic systems (including the office coffee maker), I’m happy to say that I have seen the light and am delighted to share the results from my first ever fully automated cellular assay.

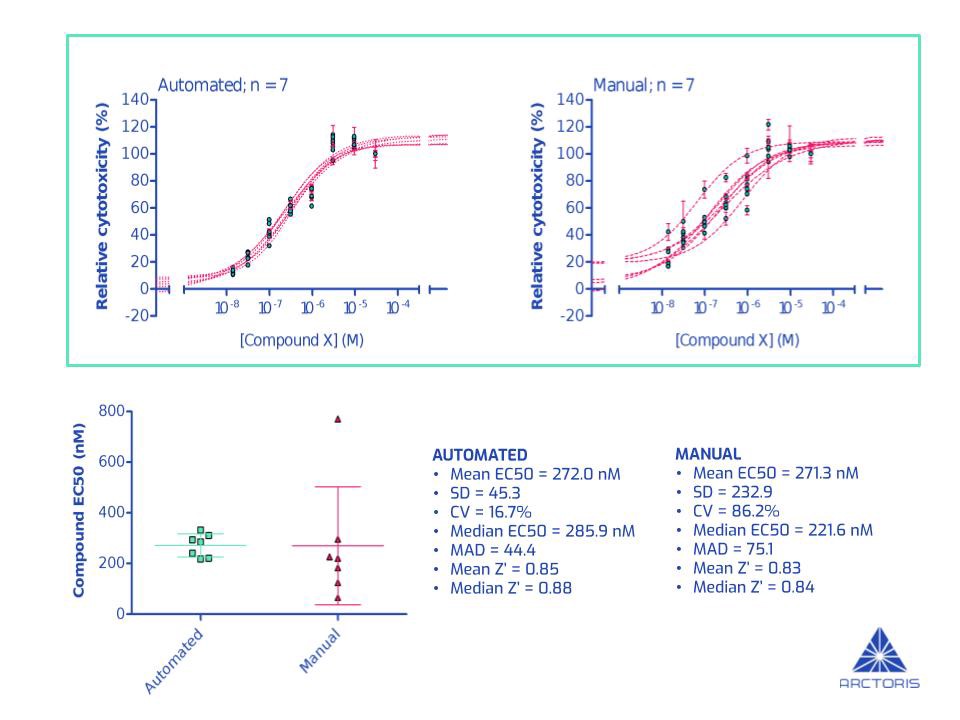

The data shown in Figure A were obtained from 7 independent iterations of a fully automated cellular assay. This particular assay measures the ability of a drug (compound X) to kill cancer cells, i.e. its relative cytotoxicity. The graphs show the proportion of dead cells (relative to cells without any exposure to the drug) 72 hours after adding increasing amounts of compound X (Figure A, top panel). To determine the potency of the drug, I calculated its EC50: the concentration that produces half of the maximal effect, which in this assay means the concentration at which 50% of the cells die (Figure A, bottom-left). The more potent a drug, the smaller its EC50 will be, which makes it possible to rank drugs by potency.
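For readers who have not calculated an EC50 before, here is a minimal sketch of the idea. It is not the analysis pipeline used for the data in this post: it simply interpolates between the two concentrations that bracket 50% cell death, on a log concentration axis, whereas a full analysis would typically fit a sigmoidal dose-response curve to all the points. The function name and the example readings are hypothetical.

```python
import math


def ec50_by_interpolation(concs_nm, pct_dead):
    """Estimate the concentration giving 50% cell death.

    Simplified approach: linear interpolation on a log10 concentration
    axis between the two points that bracket 50%. Concentrations must
    be positive and in ascending order.
    """
    points = list(zip(concs_nm, pct_dead))
    for (c_lo, y_lo), (c_hi, y_hi) in zip(points, points[1:]):
        if y_lo <= 50.0 <= y_hi and y_hi > y_lo:
            # Fraction of the way from the lower to the upper point
            frac = (50.0 - y_lo) / (y_hi - y_lo)
            log_ec50 = math.log10(c_lo) + frac * (
                math.log10(c_hi) - math.log10(c_lo)
            )
            return 10 ** log_ec50
    raise ValueError("response never crosses 50% in the tested range")


# Hypothetical readings, NOT the data from this post
concs = [10, 100, 1000, 10000]  # nM
dead = [5, 25, 75, 95]          # % dead cells relative to untreated control

ec50 = ec50_by_interpolation(concs, dead)
print(f"Estimated EC50: {ec50:.1f} nM")
```

With these made-up numbers the 50% crossing falls halfway between 100 nM and 1000 nM on the log axis, so the estimate lands at roughly 316 nM.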

In order to benchmark my fully automated assay against manual execution, Dr Alice Poppy Roworth, Arctoris’ Head of Laboratory, carried out the same experiment by hand to the best of her abilities (Figure A, top panel).

Firstly, it was great to see that the mean EC50 values were consistent between the two approaches (272.0 nM vs 271.3 nM, i.e. a difference of 0.26%) and that the Z’ (Z-prime) was north of 0.8, indicating excellent assay setup and quality (Figure A, bottom-left). However, whilst the means are reassuringly similar, the precision of the data improved significantly upon assay automation. When comparing the automated results to those obtained manually, in this particular assay, I observed a 5-fold reduction in both the standard deviation and the coefficient of variation, as well as a 1.5-fold reduction in the median absolute deviation (Figure A, bottom-right).
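The quality and precision metrics mentioned above are straightforward to compute. The sketch below uses only Python's standard library and the commonly used definition of Z’, namely 1 − 3(SD of positive controls + SD of negative controls) / |mean of positive controls − mean of negative controls|. The function names and all control and EC50 values other than the two means quoted above are hypothetical placeholders, not the measurements behind Figure A.

```python
import statistics as st


def z_prime(pos_controls, neg_controls):
    """Z' factor: 1 - 3*(sd_pos + sd_neg) / |mean_pos - mean_neg|."""
    spread = st.stdev(pos_controls) + st.stdev(neg_controls)
    window = abs(st.mean(pos_controls) - st.mean(neg_controls))
    return 1 - 3 * spread / window


def variability(values):
    """Return sample SD, coefficient of variation (%) and median
    absolute deviation for a set of replicate measurements."""
    mean = st.mean(values)
    sd = st.stdev(values)
    median = st.median(values)
    mad = st.median([abs(v - median) for v in values])
    return {"sd": sd, "cv_pct": 100 * sd / mean, "mad": mad}


def pct_difference(a, b):
    """Difference of a from b, as a percentage of b."""
    return 100 * (a - b) / b


# Hypothetical control wells (arbitrary signal units)
pos = [100, 102, 98, 101, 99]
neg = [10, 11, 9, 10, 10]
zp = z_prime(pos, neg)

# Hypothetical replicate EC50 values in nM
stats = variability([270, 272, 274])

# The two mean EC50 values quoted in the text
diff = pct_difference(272.0, 271.3)

print(f"Z' = {zp:.2f}")
print(f"SD = {stats['sd']:.1f} nM, CV = {stats['cv_pct']:.2f}%, MAD = {stats['mad']}")
print(f"Difference between means = {diff:.2f}%")
```

A Z’ between 0.5 and 1 is conventionally read as an excellent assay window, which is why a value above 0.8 is good news. The last line reproduces the 0.26% difference between the two mean EC50 values.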

Ultimately, what I observed is that end-to-end automation of assays (such as the one described above) offers several tangible and quantifiable benefits:

- Throughput. By removing manual operation, one can increase the number of assays that can be run at once, or in a given day, without introducing additional errors or variability.

- Efficiency. Automation of labour-intensive repetitive steps frees up time previously dedicated to “bench work” to be used for data analysis and interpretation, reviewing the literature, focusing on experimental design, etc.

- Consistency. The improvements in accuracy and precision enable a research team to obtain the same results time after time, independent of the scientist running the experiment.

Personally, I benefited most from the increase in efficiency — whilst Poppy was tied to the cell culture hood, I kicked back with a “well-earned” cuppa (Yorkshire tea, what else!) and finally read that publication that had been sitting on my desk for weeks. All joking aside, carrying out assays on our automated platform instead of doing them manually has freed up a huge amount of time for data analysis and project management while making it possible for me to run more experiments with greater accuracy and precision in the same timeframe.

Gareth Fearnley is a Senior Scientist at Arctoris and can be contacted via LinkedIn.